A Technical Guide to Multi-Agent AI

Building the Sentient Supply Chain

The world of supply chain management is undergoing a seismic shift. I’ve seen the ‘before’ picture firsthand; years ago, I led an engineering team building a B2B Demand Planning Engine. While powerful for its time, it relied on static, sequential processes that are no match for today's market volatility. The future now belongs to a new paradigm: a sentient, self-optimizing supply chain powered by a collaborative crew of AI agents. This isn't science fiction; it's the next evolution in enterprise automation, transforming the supply chain from a rigid set of operations into a responsive, digital nervous system.

For technical stakeholders, the question isn't why but how. This guide provides a condensed technical blueprint for designing, training, and deploying a sophisticated multi-agent system for autonomous supply chain management.

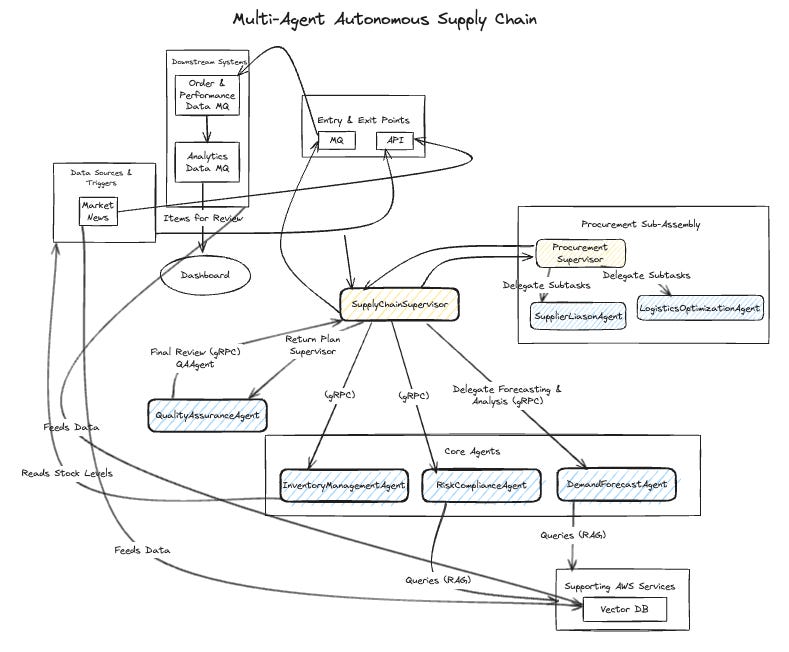

The Architectural Blueprint: A Hierarchy of Specialists

At its core, a multi-agent system tackles complexity by breaking down a massive problem into manageable tasks assigned to specialized agents. For a mission-critical process like supply chain management, a hierarchical architecture provides the necessary control, auditability, and alignment with business goals.

We use LangGraph, a framework for building stateful, agentic workflows, to orchestrate this hierarchy. A master SupplyChainSupervisor agent sits at the top, managing the global plan and delegating tasks to a crew of worker agents.

The workflow operates as a continuous, self-optimizing loop:

Demand Forecasting: The DemandForecastingAgent analyzes historical data and real-time market signals to predict future demand.

Inventory & Risk Analysis: The forecast is passed to the InventoryManagementAgent to check against current stock levels, while a RiskComplianceAgent scans for potential disruptions.

Procurement & Logistics: Based on this analysis, a nested team of agents manages supplier selection (SupplierLiaisonAgent) and plans optimal shipping routes (LogisticsOptimizationAgent).

Quality Assurance & Execution: A QualityAssuranceAgent reviews the final plan against a predefined rubric before the Supervisor executes the plan by interacting with external systems.

Continuous Monitoring: The system constantly monitors for new events, ready to trigger a re-planning cycle at a moment's notice.
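The loop above can be sketched in plain Python to make the control flow concrete. This is a minimal illustration, not LangGraph code: the `PlanState` fields, the stand-in forecasting and risk rules, and the supplier and route names are all assumptions made for the example. In a LangGraph build, each function would roughly correspond to a graph node and the supervisor to the routing logic.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PlanState:
    """Shared state passed between agents (all fields are illustrative)."""
    history: List[int]
    stock: int
    forecast: int = 0
    reorder_qty: int = 0
    risks: List[str] = field(default_factory=list)
    supplier: str = ""
    route: str = ""
    approved: bool = False
    log: List[str] = field(default_factory=list)


def demand_forecasting_agent(s: PlanState) -> PlanState:
    # Stand-in for a model call: mean of the last three periods.
    recent = s.history[-3:]
    s.forecast = round(sum(recent) / len(recent))
    return s


def inventory_and_risk(s: PlanState) -> PlanState:
    # InventoryManagementAgent: compare the forecast with current stock.
    s.reorder_qty = max(0, s.forecast - s.stock)
    # RiskComplianceAgent: hypothetical rule that treats large orders as risky.
    if s.reorder_qty > 1000:
        s.risks.append("large_order")
    return s


def procurement_team(s: PlanState) -> PlanState:
    # Nested team: SupplierLiaisonAgent, then LogisticsOptimizationAgent.
    if s.reorder_qty:
        s.supplier = "supplier-a"  # hypothetical supplier id
        s.route = "route-1"        # hypothetical route id
    return s


def quality_assurance_agent(s: PlanState) -> PlanState:
    # Stand-in rubric: approve only plans with no open risks.
    s.approved = not s.risks
    return s


PIPELINE = [
    ("demand_forecasting", demand_forecasting_agent),
    ("inventory_and_risk", inventory_and_risk),
    ("procurement_and_logistics", procurement_team),
    ("quality_assurance", quality_assurance_agent),
]


def supply_chain_supervisor(state: PlanState) -> PlanState:
    """Delegate to each worker in order, then execute or escalate."""
    for name, agent in PIPELINE:
        state = agent(state)
        state.log.append(name)
    state.log.append("execute" if state.approved else "escalate_to_human")
    return state


result = supply_chain_supervisor(PlanState(history=[100, 120, 140], stock=50))
print(result.reorder_qty, result.log[-1])
```

Continuous monitoring would amount to calling `supply_chain_supervisor` again with a fresh state whenever a new event arrives.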

Engineering the Agent Crew: Beyond Basic Prompting

The intelligence of this system comes from a crew of specialized agents, each strategically engineered for its role. We employ a mix of powerful frontier models, smaller fine-tuned models, and sophisticated training techniques.

1. The SupplyChainSupervisor (Orchestrator)

Role: The master controller of the LangGraph workflow.

Training Strategy: This agent is not fine-tuned. Its intelligence is procedural, defined by meticulously engineered prompts and the coded logic of the LangGraph state machine.

Model: A fast, capable model with strong tool-use support, such as Llama 3 8B Instruct or Gemini 1.5 Flash, is ideal for low-latency delegation.

2. The DemandForecastingAgent (The Analyst)

Role: Predict future demand by synthesizing historical data and market trends.

Training Strategy: A hybrid approach is used for maximum accuracy:

Fine-Tuning: A model such as Mistral 7B, or a hosted model like Claude 3.5 Sonnet where the provider offers fine-tuning, is trained on a dataset of historical sales data paired with ground-truth outcomes. This teaches the model the fundamental demand patterns of the business.

Retrieval-Augmented Generation (RAG): The agent is equipped with a RAG tool connected to a vector database containing market research reports, economic indicators, and news feeds. Before generating a forecast, it retrieves relevant, up-to-the-minute context, so the forecast reflects current market conditions rather than only the patterns captured at training time.
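The retrieval step can be illustrated with a toy example. The sketch below assumes word-count vectors and an in-memory list in place of a learned embedding model and a vector database; `embed`, `retrieve`, `build_forecast_prompt`, and the sample documents are hypothetical names and data introduced only for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_forecast_prompt(sku: str, history: List[int], documents: List[str]) -> str:
    """Assemble retrieved context and sales history into one prompt string."""
    context = retrieve(f"demand outlook for {sku}", documents)
    lines = ["Context:"] + [f"- {c}" for c in context]
    lines.append(f"Historical unit sales for {sku}: {history}")
    lines.append("Forecast next period's demand.")
    return "\n".join(lines)


docs = [
    "Port strike expected to delay widgets shipments",
    "Consumer demand for widgets rising ahead of holidays",
    "Quarterly earnings call transcript of a regional bank",
]
print(build_forecast_prompt("widgets", [100, 120, 140], docs))
```

The resulting prompt would then be sent to the forecasting model; only the retrieval function needs to change when the toy similarity is swapped for a real vector store.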

3. The InventoryManagementAgent (The Decision-Maker)

Role: Monitor stock levels and decide when and how much to reorder.

Training Strategy: This agent is fine-tuned to be a specialized decision engine.

Fine-Tuning: A smaller model like Llama 3 8B is trained on a dataset of inventory scenarios. Each data point consists of inputs (current stock, demand forecast, lead times) and the optimal output action (e.g., { "action": "CREATE_PURCHASE_ORDER", "quantity": 5000 }), derived from established inventory formulas like Economic Order Quantity (EOQ). This transforms the LLM into a reliable, cost-effective tool for a specific, repetitive task.
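As a rough illustration of how such labels could be derived, the sketch below uses the classic EOQ formula, sqrt(2DS/H), where D is annual demand, S is the cost per order, and H is the annual holding cost per unit, together with a simple reorder point of daily demand times lead time. The cost parameters, the `NO_ACTION` label, and the record layout are assumptions for this example, not part of the system described above.

```python
import json
import math


def eoq(annual_demand: float, order_cost: float, holding_cost: float) -> float:
    """Economic Order Quantity: sqrt(2 * D * S / H)."""
    return math.sqrt(2 * annual_demand * order_cost / holding_cost)


def label_scenario(current_stock: float, annual_demand: float, lead_time_days: float,
                   order_cost: float, holding_cost: float) -> dict:
    """Build one fine-tuning record: scenario inputs plus the formula-derived action."""
    # Reorder once stock can no longer cover demand during the supplier lead time.
    reorder_point = annual_demand / 365 * lead_time_days
    if current_stock <= reorder_point:
        quantity = round(eoq(annual_demand, order_cost, holding_cost))
        action = {"action": "CREATE_PURCHASE_ORDER", "quantity": quantity}
    else:
        action = {"action": "NO_ACTION"}  # hypothetical label for "do nothing"
    return {
        "input": {
            "current_stock": current_stock,
            "annual_demand_forecast": annual_demand,
            "lead_time_days": lead_time_days,
        },
        "output": action,
    }


record = label_scenario(current_stock=300, annual_demand=10_000,
                        lead_time_days=14, order_cost=50, holding_cost=4)
print(json.dumps(record))  # one line of a JSONL training file
```

Generating many such records across varied stock levels, forecasts, and lead times would give the kind of scenario dataset the fine-tuning step describes.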

4. The QualityAssuranceAgent & The Human-in-the-Loop (The Guardian)

Role: The critical junction for ensuring trust and accuracy. This agent reviews the system's proposed plans and flags anomalies for human review.

Training Strategy: The focus here is on the process, not the model.

LLM-as-Judge: A powerful frontier model like GPT-4o is given a detailed, expert-defined rubric. It is prompted to evaluate the final plan against this rubric, providing scores and justifications. If any criterion fails, the plan is automatically flagged.

Human-in-the-Loop (HITL): Flagged plans are sent to a human expert via a dedicated UI. The expert's role is twofold: 1) to make the final decision and correct the plan, and 2) to provide structured feedback on why the plan was flawed.

The Feedback Loop: This expert feedback is invaluable. Each correction becomes a high-quality data point that is fed back into the fine-tuning datasets for the other agents. This creates a virtuous cycle where the system learns directly from expert oversight, continuously improving its accuracy and reliability over time.
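The judge, flag, and feedback steps can be sketched as follows. The rubric criteria, the 1 to 5 scoring scale, the pass threshold, and the feedback record layout are assumptions for illustration, and `stub_judge` is a rule-based stand-in for the call to a frontier model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Verdict:
    criterion: str
    score: int          # assumed scale: 1 (fail) to 5 (pass)
    justification: str


# Hypothetical rubric: criterion name -> description shown to the judge.
RUBRIC: Dict[str, str] = {
    "within_budget": "Total plan cost must not exceed the approved budget.",
    "lead_time": "Delivery lead time must be 30 days or less.",
}

Judge = Callable[[dict, str, str], Verdict]


def stub_judge(plan: dict, criterion: str, description: str) -> Verdict:
    """Rule-based stand-in for an LLM judge, so the sketch runs offline."""
    if criterion == "within_budget":
        ok = plan["cost"] <= plan["budget"]
    else:
        ok = plan["lead_time_days"] <= 30
    return Verdict(criterion, 5 if ok else 1, description if ok else f"Violates: {description}")


def review_plan(plan: dict, rubric: Dict[str, str], judge: Judge,
                pass_score: int = 4) -> Tuple[List[Verdict], bool]:
    """Score every criterion; flag the plan if any score is below the threshold."""
    verdicts = [judge(plan, name, desc) for name, desc in rubric.items()]
    flagged = any(v.score < pass_score for v in verdicts)
    return verdicts, flagged


def record_feedback(plan: dict, corrected_plan: dict, reason: str,
                    dataset: List[dict]) -> None:
    """Store the expert's correction as a future fine-tuning example."""
    dataset.append({"proposed": plan, "corrected": corrected_plan, "reason": reason})


feedback_dataset: List[dict] = []
plan = {"cost": 120, "budget": 100, "lead_time_days": 21}
verdicts, flagged = review_plan(plan, RUBRIC, stub_judge)
if flagged:
    # In the real flow, the corrected plan and reason come from the human expert's UI.
    record_feedback(plan, {**plan, "cost": 95}, "Cheaper supplier available", feedback_dataset)
print(flagged, len(feedback_dataset))
```

Keeping the judge behind a plain callable makes it straightforward to swap the stub for a model-backed implementation without touching the flagging or feedback logic.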

Production Deployment on AWS

An enterprise-grade agentic system requires a scalable and resilient infrastructure. Our blueprint leverages a container-based approach orchestrated with Kubernetes.

Containerization: Each agent is packaged as a microservice using Docker and stored in Amazon Elastic Container Registry (ECR). This isolates dependencies and allows for independent scaling.

Orchestration: Amazon Elastic Kubernetes Service (EKS) manages the container lifecycle. We split workloads across two node groups:

CPU Node Group: For standard services like the API gateway and message brokers.

GPU Node Group: For the agent services that require GPU acceleration (e.g., DemandForecastingAgent), using instances from the P3 or G5 families.

Autoscaling: We use Karpenter, an open-source cluster autoscaler built by AWS, to provision right-sized EC2 instances (including GPU instances) on demand, optimizing both performance and cost.

Communication: A hybrid model is used. Low-latency internal requests between agents use gRPC, load-balanced by an Application Load Balancer (ALB). Asynchronous handoffs to external systems go through a managed message broker such as Amazon MQ for RabbitMQ, so that messages are queued rather than lost if a downstream system is temporarily unavailable.

The Path Forward

Building a multi-agent system is a journey from automation to autonomy. By combining hierarchical control, specialized agent training, and a robust human-in-the-loop validation process, we can construct a supply chain that doesn't just execute commands but senses, reasons, and adapts. This is the foundation for creating a truly sentient supply chain—one that is not only more efficient and resilient but also a powerful competitive advantage in an increasingly unpredictable world.
